One intention I set for myself this year is to start publishing some of my writings online. This allows me to be a bit more critical towards my own thinking, and of course to share my thoughts about the things that keep me up at night.

Let’s start with a topic that has been on my mind a lot in the past few months: AI agents.

In most AI-related WhatsApp and Telegram communities I’m part of, many have been eagerly waiting for the moment when AI transforms from being a passive tool that responds to your prompts to one that anticipates your needs and acts on your behalf.

This is no longer some sci-fi vision of the future; it’s happening right now.

AI agents are here, and they are transforming how we work and interact with technology. But are they the revolution within AI that some claim them to be, or is it all just hype?

The main purpose of writing this article is to deepen my own understanding of AI agents and in what industries and areas of life they will likely make the most impact.

Based on this, I will aim to provide some guidance on how to best navigate and adapt to the agentic era.

Let’s dive in.

What are AI Agents?

First, let’s define the term AI agent.

An AI Agent is a system capable of (1) perceiving its environment, (2) reasoning about goals, (3) taking autonomous actions, and (4) learning from outcomes.

So, in order to be an agent, the AI must be capable of:

Perception: AI agents gather and process information from various sources, like sensors, APIs, or data streams. They analyze these inputs to understand their environment. Example: A virtual assistant identifies flight delays from your email and updates your calendar accordingly.

Reasoning: Based on the data they gather, AI agents evaluate their options and decide the best course of action to achieve their objectives. Example: A financial agent determines whether to buy or sell stocks by analyzing market trends in real-time.

Action: Once they’ve made decisions, AI agents execute tasks autonomously. Example: A logistics agent rerouts shipments to avoid weather-related delays.

Learning: Unlike static systems, AI agents improve over time. They learn from feedback and outcomes, enabling them to handle new challenges more effectively. Example: A customer support chatbot refines its responses based on user satisfaction data.
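To make the four capabilities a bit more concrete, here is a minimal toy sketch of the perceive, reason, act, learn loop, loosely based on the logistics example above. Everything in it (the `RouteAgent` class, the route names, the delay numbers) is made up for illustration; a real agent would call actual APIs and use far richer models.

```python
from dataclasses import dataclass, field


@dataclass
class RouteAgent:
    """Toy agent that picks the shipping route with the lowest expected delay."""
    # Learned delay estimates per route, in hours (hypothetical starting values).
    estimates: dict = field(default_factory=lambda: {"north": 2.0, "south": 2.0})
    learning_rate: float = 0.5

    def perceive(self, weather_report: dict) -> dict:
        # Perception: turn raw input into a delay penalty per route.
        return {route: 3.0 if weather_report.get(route) == "storm" else 0.0
                for route in self.estimates}

    def reason(self, penalties: dict) -> str:
        # Reasoning: choose the route with the lowest expected total delay.
        return min(self.estimates, key=lambda r: self.estimates[r] + penalties[r])

    def act(self, route: str) -> str:
        # Action: a real system would call a logistics API here.
        return f"rerouted via {route}"

    def learn(self, route: str, observed_delay: float) -> None:
        # Learning: nudge the estimate toward the observed outcome.
        old = self.estimates[route]
        self.estimates[route] = old + self.learning_rate * (observed_delay - old)


agent = RouteAgent()
penalties = agent.perceive({"north": "storm", "south": "clear"})
choice = agent.reason(penalties)
result = agent.act(choice)
agent.learn(choice, observed_delay=4.0)
```

The point of the sketch is the shape of the loop: each step feeds the next, and the learning step changes what the agent will decide the next time around.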

What makes AI agents unique is their autonomy.

While tools like ChatGPT and Claude need to respond to prompts (for now), agents go one step further by acting independently to accomplish specific goals. They transform AI from a passive tool into a proactive collaborator.

To fully understand the impact of AI agents, let’s look at some real-world examples that highlight their capabilities.

Agent Assistants

Until yesterday, one of the most accessible and practical examples of an AI agent was ChatGPT with plugins, which expands its functionality beyond generating responses to acting as a proactive agent.

By integrating plugins, ChatGPT connects with external systems, enabling it to perform tasks autonomously and provide actionable outcomes.

On the day of writing, however, OpenAI released its new Operator feature for ChatGPT Pro subscribers in the US. I’m going to play with it this weekend, so I haven’t tried it out myself yet, but the first previews seem pretty impressive.

Operator can order dinner for you, book Airbnbs and flights, help you test your app UX, and much more. Check out the thread below for some examples.

Claude also made some waves last year with a similar tool, and Anthropic’s CEO Dario Amodei recently predicted that by 2027 AI will surpass humans at “almost everything”.

Furthermore, Perplexity just launched its new Perplexity Assistant for Android. It’s basically Siri on steroids that drafts emails, calls a cab, and books dinner for you.

It seems hyper-clear that this is the main direction in which the currently dominant models (ChatGPT, Claude, Gemini, Grok) will evolve. They will function as a sort of digital assistant that not only helps you remember stuff but can actually do the work for you.

To get a better understanding of the different types of agents that are emerging, let’s have a look at some more specialized variants.

Customer Support Agents

One area where agents are already deployed at scale is in customer support.

Last year, Klarna made some headlines after implementing a new AI support agent. The agent is capable of analyzing and resolving incoming support tickets by itself, speaks over 35 languages, and is live 24/7.

Klarna’s workforce dropped from 5,000 employees to 3,800 and its average revenue per employee jumped by 73%.

These are quite impressive numbers, especially when you realize that these are just early models and far from reaching their full potential.

So not only are these agents cheaper than humans, they are also better. This of course raises quite a few ethical questions, which I will address later on.

For e-comm and online businesses, it seems almost a no-brainer to integrate a customer support agent into your workflow asap.

Financial Agents: AIXBT

At the financial end of the spectrum, AIXBT represents a great example of an AI agent designed for the cryptocurrency market.

AIXBT operates as an autonomous trading agent, leveraging machine learning to optimize trading strategies and asset management.

I have been using AIXBT myself for over a month now for my own crypto research and I’m not the only one. In basically all crypto channels I’m in, AIXBT is quickly becoming an authority.

This might sound weird, but let me explain how it works.

AIXBT monitors the crypto markets and sentiment on X and uses this information to make decisions about buying, selling, or holding specific assets. You can ask AIXBT what it thinks of a specific token by tagging it, and it will provide you with its opinion in an often quite comical manner. Moreover, it acts independently, without requiring constant input from a human operator.

So what this means is that you basically have a financial analyst who is plugged into X, has the capacity to process massive amounts of data, doesn’t sleep or eat, and is online 24/7.

This is a game-changer.

No human can compete with this kind of online presence, and AIXBT’s track record speaks for itself with some highly profitable calls.

Although an endorsement by AIXBT is not at all a guarantee of success (at the time of writing it has a win rate of 48%), its perceived value seems to keep growing.

On sentient.market you can monitor AIXBT’s metrics in real-time.

Example of AIXBT providing automated financial alpha

Health Agents: Decentralized Longevity Research

In the healthcare space, we also see a lot of interesting stuff happening.

For example, the Decentralized Longevity Research agent is an AI agent tailored for advancing longevity science.

More specifically, this agent autonomously reviews and synthesizes every single research paper from bioRxiv and medRxiv, with the goal of improving Bryan Johnson’s Blueprint Protocol.

Here’s how it works:

First, the agent autonomously scans an immense dataset of research papers to see whether any studies match its goal of improving longevity protocols (for this it uses a cheaper model, GPT-4o mini). It then identifies relevant findings and, based on deeper analysis, improves the Blueprint Protocol (for this it uses a more advanced model, OpenAI o1).

At the time of writing, the agent scraped a total of 141,823 research papers, found 8,388 relevant ones, and processed 433.
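The two-step setup described above (a cheap model to screen everything, a stronger model only for the hits) can be sketched roughly like this. It is only an illustration of the pattern, not the agent's actual code: `keyword_screen` and `summarize` are hypothetical stand-ins for the two model calls, and the sample abstracts are invented. The last lines simply turn the numbers reported above into pass-through rates.

```python
from typing import Callable


def two_stage_review(
    papers: list[str],
    cheap_screen: Callable[[str], bool],
    deep_analyze: Callable[[str], str],
    budget: int,
) -> dict:
    """Screen every paper cheaply, then deep-analyze at most `budget` hits."""
    relevant = [p for p in papers if cheap_screen(p)]
    processed = [deep_analyze(p) for p in relevant[:budget]]
    return {
        "scanned": len(papers),
        "relevant": len(relevant),
        "processed": len(processed),
        "findings": processed,
    }


# Stand-ins for the two model calls (hypothetical; no real API is used here).
def keyword_screen(abstract: str) -> bool:
    text = abstract.lower()
    return "longevity" in text or "aging" in text


def summarize(abstract: str) -> str:
    return f"Candidate protocol input: {abstract[:40]}"


abstracts = [
    "Aging biomarkers in mice after caloric restriction",
    "A new method for protein folding prediction",
    "Longevity effects of rapamycin in older adults",
    "Soil microbiome diversity in alpine meadows",
]
report = two_stage_review(abstracts, keyword_screen, summarize, budget=1)

# The funnel reported in the article, expressed as pass-through rates.
scanned, relevant, processed = 141_823, 8_388, 433
screen_rate = relevant / scanned   # roughly 5.9% of scraped papers were relevant
deep_rate = processed / relevant   # roughly 5.2% of relevant papers were processed
```

The design choice is about cost: the expensive model only ever sees the small fraction of papers that survive the cheap filter.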

You can check the real-time research status here.

Future goals include creating an autonomous longevity coach and allowing the agent to write its own research papers based on its findings.

As you can see, the agent is already able to perform the work of multiple dedicated research assistants. It can scrape a massive amount of data and find relevant connections that would take a human months or even years to find.

DeSci Agents and Agentic Swarms

This brings us to DeSci agents and swarms.

Decentralized science (DeSci) is an emerging paradigm where scientific research and funding occur via blockchain technology. This setup enables scientists and communities to collaborate on projects without relying on traditional gatekeeping institutions.

At the heart of this model are DeSci agents, which are fully autonomous entities, often represented as smart contracts or DAOs (decentralized autonomous organizations). These agents coordinate resources, execute tasks, and incentivize participation in a decentralized manner.

The Decentralized Longevity Research Agent I mentioned above is a perfect example of a DeSci agent.

When we take this concept one step further, we get to agentic swarms.

An agentic swarm refers to a network of independent, autonomous agents that collectively achieve complex objectives. The idea is inspired by biological swarms like bees or ants, where individual entities follow simple rules but collectively demonstrate emergent intelligence and cohesive behavior.

The key features of an agentic swarm are:

Decentralization: Each agent operates independently, without a central controller.

Collaboration: Agents communicate and share resources to achieve common objectives.

Adaptability: Swarms can adapt to new conditions by dynamically reallocating resources or tasks.

Efficiency: Like biological systems, agentic swarms optimize tasks such as data analysis, funding allocation, or collaborative research.

Resilience: Failures of individual agents do not cripple the swarm; others fill the gap.

Let’s clarify this more with an example.

I recently invested a bit into BeeARD, which stands for Bioeconomic Environment for Autonomous Research Discovery.

BeeARD operates like a digital hive and plays a crucial role in PsyDAO and BioDAO, two communities pushing the boundaries of psychedelic research and biotech.

In PsyDAO, BeeARD becomes the invisible coordinator for funding and research grants. Imagine a swarm of agents, each acting like a “voting bee.” They gather proposals from scientists exploring groundbreaking therapies using substances like psilocybin and MDMA.

These agents analyze the proposals and direct the most promising ones to the community for approval, ensuring resources flow to projects with the greatest potential for impact.

In BioDAO, BeeARD simplifies the peer review of research and allocates resources dynamically. When researchers submit work, BeeARD distributes it to multiple reviewers, each working independently but contributing to a unified process. In emergencies, such as health crises, it shifts focus to critical areas like vaccine development or genetic research. BeeARD evaluates proposals based on scientific impact and feasibility, with agents voting on the most promising projects.

Of course, we are still in the early days of agentic swarms. BeeARD is just one example that caught my attention, but I am already seeing more and more attention shift toward swarms.
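Here is a rough sketch of what "agents voting on proposals" could look like in code. It is my own simplification, not how BeeARD is implemented: the proposals, the scores, and the `make_agent` and `swarm_shortlist` helpers are all hypothetical, and the single aggregation function stands in for what a real swarm would do on-chain or peer-to-peer without a central controller.

```python
from statistics import mean


def make_agent(impact_weight: float):
    """Return a scoring agent with its own weighting of impact vs. feasibility."""
    def score(proposal: dict) -> float:
        return (impact_weight * proposal["impact"]
                + (1 - impact_weight) * proposal["feasibility"])
    return score


def swarm_shortlist(proposals: list[dict], agents: list, top_n: int) -> list[str]:
    """Average the scores of all agents that respond; skip agents that fail."""
    totals = {}
    for proposal in proposals:
        scores = []
        for agent in agents:
            try:
                scores.append(agent(proposal))
            except Exception:
                continue  # Resilience: one failing agent does not stop the swarm.
        totals[proposal["title"]] = mean(scores) if scores else 0.0
    ranked = sorted(totals, key=totals.get, reverse=True)
    return ranked[:top_n]


def broken_agent(proposal: dict) -> float:
    raise RuntimeError("agent offline")


proposals = [
    {"title": "Psilocybin for depression", "impact": 0.9, "feasibility": 0.6},
    {"title": "MDMA-assisted therapy", "impact": 0.8, "feasibility": 0.8},
    {"title": "Speculative moonshot", "impact": 1.0, "feasibility": 0.1},
]
agents = [make_agent(0.3), make_agent(0.5), make_agent(0.7), broken_agent]
shortlist = swarm_shortlist(proposals, agents, top_n=2)
```

Each agent follows a simple rule with its own bias, one agent is offline, and the group still produces a shortlist that would then go to the community for approval.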

Since DeSci agents operate on blockchains, naturally the need for a token is often introduced. This makes these agents and swarms potentially financially interesting, but also prone to a lot of bs and crypto scams.

So be aware.

Tutor Agents

Let’s close the examples off with one of the use cases I am most excited about: AI tutors.

The idea of having a dedicated tutor isn’t new.

In ancient Greece, wealthy families would often employ personal tutors to educate their children in philosophy, rhetoric, and sciences. These tutors were more than just teachers; they were mentors, adapting their teaching to each student’s unique abilities and interests.

Since I was a kid, I always craved a relationship like this.

The idea of having a dedicated personal tutor instead of one person to teach 30 or more children at the same time always seemed like some sort of utopian fantasy.

However, with AI this is now becoming a real possibility.

My own relationship with ChatGPT and Claude has already started to look more and more like a mentor-mentee dynamic. I built several custom GPTs with specific prompts in order to assist me in different areas of life. The one I use most often is my own tutor GPT.

What it basically does is help me understand complex ideas in ways I can easily comprehend. For example, I used it for this article to help me grasp agentic swarms quickly. Since it is aware of all my current prompts, knowledge level, and expertise, it can attune its communication exactly to my needs.

For example, I now have entire conversations with the custom GPT while I’m reading a book to deepen my understanding of new concepts, and when I go for a walk I have a chat on the go to help me rehash and integrate what I just read.

It blows my mind that this is already possible.

A recent study confirmed the potential of using AI in education. A randomized controlled trial in Nigeria showed that over a six-week after-school program between June and July 2024, students who engaged with GPT-4 as a virtual tutor achieved learning gains equivalent to nearly two years of traditional schooling.

So how does this translate to AI agents?

One AI tutor I came across is Studdy, a platform offering an AI-powered math tutor designed to be accessible to everyone. Studdy uses advanced speech, text, and image recognition to understand where students are struggling and provides step-by-step guidance tailored to their needs. A similar tutor is Synthesis, which also aims to be a superhuman math tutor for children. This video shows how these apps are already used right now.

One more example is Integrate, an app that Zowie, co-creator of my Systematic Mastery YouTube channel, is building. The goal of Integrate is to build an agent that helps you process and digest information better and proactively supports knowledge acquisition.

To sum it up, tutor agents have the potential to provide access to tailored guidance in education to millions of people who currently lack this. This is massive.

In short, I believe that this niche is about to explode.

How to Prepare for AI Agents

Based on the examples I shared above, I think it’s safe to say that AI agents are probably more than just hype and are here to stay. I’m not sure yet about the exact form they will take as we are still extremely early, so we will see a lot of iterations in search for the killer app, or better said, killer agent.

When I researched how agents are most likely to evolve, I came across quite a few models with different projections. One thing these models have in common is that they segment the rise of agents into different phases.

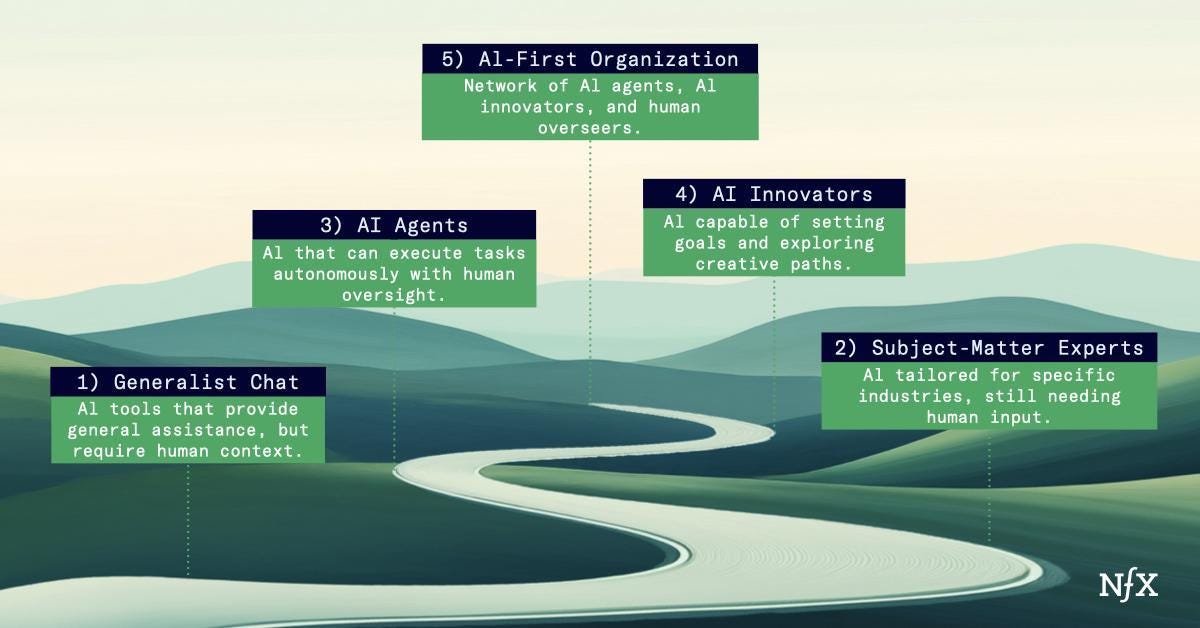

Whether you differentiate seven, five, or four distinct phases seems less relevant for our purposes. It does help, however, to have some sort of mental model of where we currently are and where we are headed. I found the model of NFX below most helpful.

According to this model, we are currently in phase 3, where AI agents are able to execute tasks but still require human oversight.

At phase 4, creative thinking enters the equation, which allows agents to innovate on your behalf. This means that the agent will transcend classic if-then reasoning and can come up with its own creative input.

At phase 5, we’ll see entire networks of agents, like the swarms I discussed earlier, but with the key difference that the AI will be able to self-select its own goals. So not only will the agents be able to solve problems creatively, they will be capable of deciding what problems are interesting enough to work on. Completely self-operating autonomous organizations emerge.

This brings us to the big ethical elephant in the room.

One logical conclusion that follows from all this is that agents will start to replace humans in many domains, fast.

Last November, AI startup Artisan went viral after launching its quite dystopian new billboard campaign. Judge for yourself:

This picture fascinated me for quite a while, and it probably doesn’t need much explanation why.

We could spend hours arguing about the ethics of AI replacing humans in the workforce (I can see a case for both sides of the argument), but that would not change the hard truth of the matter: AI agents are here, so you better adapt.

So how can you, as a human, prepare best for the agentic era?

First of all, the idea that humans will be replaced completely seems to be popular among both AI utopists and doomers, but might not be correct.

Techno-optimist founder Marc Andreessen critiqued this view today in an elaborate X post, basically reasoning that AI won’t cause unemployment because most of the economy won’t allow it.

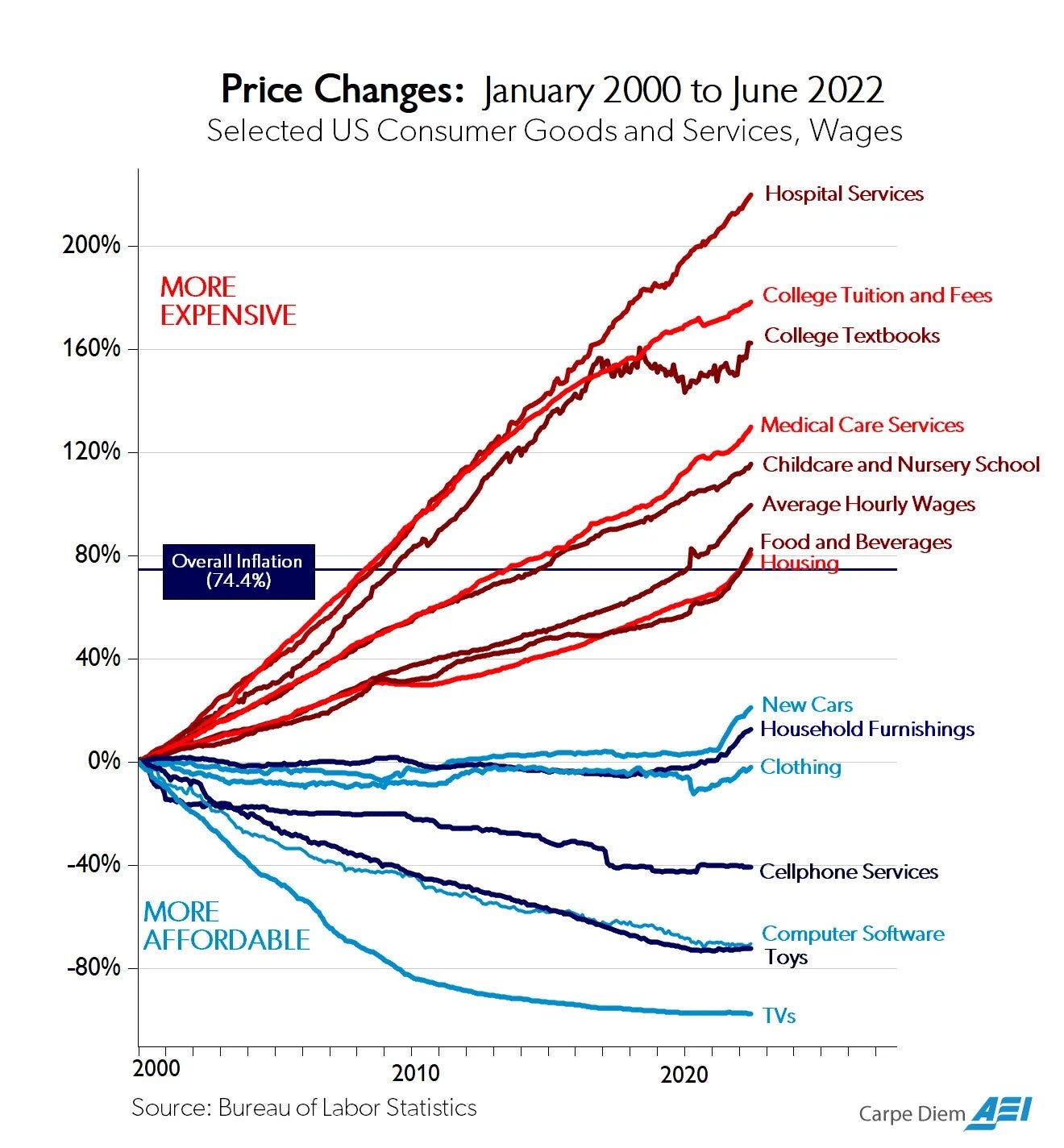

Regulated sectors like healthcare, education, and housing block innovation, driving prices up and keeping technology out. Meanwhile, less regulated areas benefit from falling prices and better quality. Over time, these regulated sectors take over more of the economy, leaving little room for AI to have the disruptive impact people fear.

I don’t find this argument particularly convincing for the main reason that the manner in which AI will cause disruption is fundamentally different from previous technological innovations. AI will be mostly deployed in the cognitive domains and at an unprecedented speed. Moreover, as we have seen, AI will soon be able to work by itself. It does not necessarily require human oversight to be productive, a key difference from previous technological breakthroughs.

I am not arguing per se that humans will become totally useless, I am arguing that humans will need to shift to profoundly new roles in great numbers, and this takes time. There will likely be a painful transitional phase.

Based on what we know now, I will try to outline some high-level advice on how to navigate this transitional phase. I think we need to make two types of adjustments: we need to (1) update our mental models about how to work and coexist with AI, and (2) invest in acquiring the right skills to operate agents.

Mental model adjustment

The first step is to update our idea of what AI actually is.

I don’t mean to ignite a philosophical debate here on the question of whether AIs possess consciousness. Enough has been written on that topic already, and we can save it for a later date. But whether AIs are conscious or not, for our purposes right now it does make sense to perceive them as such.

View AI as a Co-Worker Instead of a Tool

Since the rise of ChatGPT, we have mainly used LLMs and other AIs as tools. Very powerful tools, but tools nonetheless. They still require our input, and a lot of tweaking and prompting is needed in order to get the results we desire.

With agents, this dynamic will change.

They will still need input, but since agents can perform tasks autonomously, even in teams (swarms), it is better to start perceiving them as digital co-workers. In other words, as humans.

In 2025 we will likely see the first agents being hired by companies (we already saw the AI agent Luna being hired for a paid internship last year).

This means that if you work for a company, I anticipate there’s a real possibility you will get a new AI colleague this year. This might require some mental adjusting.

If you are a founder or work for yourself, this shift might be particularly profound. You should not necessarily think in terms of hiring someone that is good with AI, but actually hiring an AI itself.

I’ve had some conversations about this last year with other founders and it still seemed a bit sci-fi back then, but I think this year the vibe will shift. It will become socially acceptable, if not outright unavoidable for survival, to start hiring agents instead of humans.

Pivot to an Agent-Resilient Industry (if needed)

If agents are introduced as competition on the job market, this means you need to do a serious check-in about whether your job can be done by an agent. Because in a capitalist system that optimizes for speed, efficiency, and cost, sooner or later you will be replaced.

There are many theories on how in the long run AI will allow us to build some utopian neo-communist society where everything is basically free because the costs of labor and production are close to zero (see Fully Automated Luxury Communism for example), but in the short term this is all just useless speculation.

Even if this utopian dream manifests (and I am more and more positive that it might actually happen), you will need to survive the time it takes to get there. You need to bridge the gap.

But how can we assess if our current occupation is AI-proof?

It seems quite hard to do at this point, as there are so many moving parts. In general, I like to use David Shapiro’s mantra here: if you can do your job exclusively in front of a screen, you’re toast. If the current developments with agents continue on the same trajectory, it seems highly plausible that all digital work can be done by agents within the next decade.

To me, it seems that the safest jobs are those where human interaction is absolutely essential. I found the image below in the AI trenches on X that indicates that ChatGPT is already better and more empathetic right now than most physicians.

Whether or not this is true, my basic assumption is that most people do not want an AI physician or doctor (personally, I do not fall into this category).

It seems to me that most people prefer the human-to-human relationship when it comes to discussing sensitive health data, or similar scenarios.

Think about psychologists, caretakers, coaches, personal trainers, or government officials. Here I notice within myself that I don’t have an issue with an AI psychologist, but to be fined by an AI police officer or judge feels a bit... odd.

The point here is that I assume that these roles will be performed by humans for a while longer, even if there is a technically superior AI agent available. But I must say, this is not a strong conviction.

Another area that seems to be relatively safe is the physical goods and services domain, especially the goods domain. If you own a business that sells physical products, you can use AI to optimize your processes to infinity, but to actually create the physical goods out of thin air seems to be still quite hard to do (for now at least).

One big side note here though is that the barrier for anyone to create a business will be lowered significantly. If you spend a few minutes on tech bro X you already see plenty of coders who fear for their jobs or basically use AI to do 80% of their work.

This trend will continue - but in all domains.

Just as any kid with an internet connection is now able to create their own meme coin with zero effort through websites like pump.fun, it will likely not require much technical skill in a few years to launch your own app, website, agent, game, or even movie.

When it comes to e-commerce for example, your website can be built with AI, your marketing can be done with AI, your distribution can be done with AI, customer support can be done with AI, etc. etc.

This is all not far-fetched. So even if you own a thriving online business, you’ll need to step up your game.

What I assume will remain a bit more complex is the actual production of goods. There is some speculation going on around robotics, 3D printing, protein engineering, and advanced material discovery, but this is all extremely niche, and although production in these areas will likely become cheaper, the actual production process will still be restricted to a limited number of players.

So it makes sense then to invest in shifting from software to hardware to keep an edge. Hardware in this case just means anything physical, so natural products and food also fall into this category.

Although pivoting to a new industry might seem logical, it still is quite a gamble as innovation directions will be hard to predict. One thing that seems a bit more of a solid strategy is to learn how to operate agents well.

Learn How to Operate Agents

Since agents will only be as good as the instructions they receive, they carry tremendous leverage potential. If your prompt is only 1% better than your competitor's, this could yield compounding results, because your agent will work day and night on a better set of instructions.
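To get a feel for the leverage argument, here is a back-of-the-envelope sketch. It rests on a big assumption of mine: that a 1% edge applies to every daily cycle and that each cycle builds on the previous one. Whether a prompt advantage really compounds like this is an open question, and the function names are only illustrative.

```python
def compounded_edge(edge_per_cycle: float, cycles: int) -> float:
    """Relative advantage after `cycles` rounds if the edge compounds each round."""
    return (1 + edge_per_cycle) ** cycles


def additive_edge(edge_per_cycle: float, cycles: int) -> float:
    """Relative advantage if the edge only adds up instead of compounding."""
    return 1 + edge_per_cycle * cycles


daily_compounding = compounded_edge(0.01, 365)  # about 37.8x after a year
daily_additive = additive_edge(0.01, 365)       # 4.65x after a year
one_shot = compounded_edge(0.01, 1)             # 1.01x for a single run
```

The gap between the one-off 1.01x and the compounded figure is the whole point: a small edge only becomes large leverage if the agent keeps running and its output feeds back into the next round.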

Therefore, focusing on understanding how to operate agents to maximize leverage is key. Let’s explore some ways to do this.

Prompt Engineering

Prompt engineering is arguably the cornerstone of high-leverage skills when working with AI agents, and it will be more important than it already is with current LLMs.

To master this skill, focus on clarity and specificity, and just practice a lot with your own GPTs. There are plenty of courses on prompt engineering available and even some services like PromptPal, but I haven’t tried these myself.

For now, I am most comfortable with designing my own prompts and tweaking along the way, as you get an intuitive feeling for the model and its responses.

Prioritize Iteration

One thing I have noticed with using LLMs for over a year now is that I can get quite complacent with my GPTs. Although they are not perfect by a long shot, I am unconsciously getting used to their output level.

This is probably just a residue of my static relationship with software, where if something isn’t working I just wait for an update. This is of course an error in my own approach, as I can just tweak and update my custom GPTs to a certain degree.

When it comes to agents, the pain of this attitude will be greater as the leverage is also greater. Not taking the time to review and update agents can therefore be problematic, so regular reviews should be prioritized.

I don’t consider myself a professional trader by any measure, but if there is one thing I learned from trading, it is that the most important skill to master is radical analysis of your strategies, and to actively learn from your mistakes. Since there is actual money on the line, there is direct and crystal-clear feedback on how your method performs.

So once a week, I sit down and review my strategies, not only for trading but also for other elements of life, basically a meta-process review. This attitude should be applied to my relationship with agents as well, simply because there is so much leverage involved.

Toolstack Mastery

There lies quite some leverage in mastering different tools simultaneously as well.

Floating around is this idea that in the coming few years we will see the first trillionaire, but also the first self-made solo billionaire who used nothing but AI.

The meme below basically captures this:

This is potentially good news for fellow introverts.

If you just master the right AIs and agents that matter for your business, you could hypothetically escape unwanted human interaction in its entirety.

The key here is to be fluent with multiple agents. Toolstacking is therefore a must. Identify the agents that are relevant for you, and master them.

Using AI as a mentor

A final area that has been beneficial to me so far is to actually use ChatGPT as a brainstorming partner/mentor.

Every Saturday, I upload my journal entries of the previous week and get some pretty valuable insights that I didn’t spot myself.

I have noticed that many friends who are into AI do the same, to the point where some even canceled their therapist and now exclusively use ChatGPT as their digital psychologist. I’m not sure if I am fully on board with the latter, but the trend seems clear.

We saw how AI tutors will serve the role of a supporting agent in the domain of learning, but we can extrapolate this to creative, emotional, and strategic needs as well. I project that there will be tremendous potential in forming a mutually respectful, human-like relationship with AI.

I suspect this will trigger some resistance in most people, but those who embrace this synergy early will gain a clear edge, using AI not just for support but as a driver of personal growth.

Conclusion

To conclude, I think it’s clear that the agentic era is more than just hype and we’re just witnessing its early days.

While it’s hard to predict exactly how agents will evolve, we will likely see the emergence of autonomous organizations on multiple levels of size. As a result, the coming years will probably bring significant challenges for many people. This does not mean that humans will become completely obsolete, but we do need to update our ideas of where in the larger scheme of things we can add value; we need to change our roles.

The right thing to do seems to be to evaluate and adjust your position now (if needed), to master the right skills for you to thrive in the agentic era, and to educate as many people as you can on how to navigate these unknown waters with you.

Enjoy the ride.